PDEOpNet

PDEOpNet - EECS 351

Overview

Our goal in this project was to use learning-based methods to solve different families of partial differential equations (PDEs). Traditional numerical methods rely on complicated finite discretizations, whereas learning-based methods can offer dramatically faster solutions. For example, models have been developed that solve the Navier-Stokes equations 1000 times faster than traditional solvers. The implications of this speedup are significant: many of these PDEs are used to model complex phenomena, but traditional solvers become computationally prohibitive as the scale of the data grows, even on the most advanced supercomputers. Navier-Stokes in particular can be used to model weather patterns, and a large speedup could significantly improve our ability to model these patterns accurately and efficiently at a global scale.

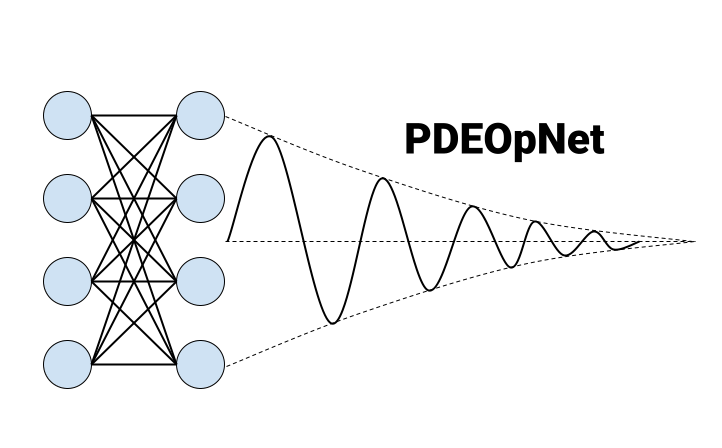

For our specific use case, we chose the heat equation as our first PDE family, since it has a closed-form solution that we could use both to generate our own data and to check our model-based solutions against. Research on learning-based PDE solvers is moving quickly, and we decided to explore two of the newest model families: PINNs and neural operators.

PINNs, or physics-informed neural networks, are neural networks that incorporate information about a physical process into training by adding a physics-based term to the loss function. While developing a PINN for the 2D heat equation, we also used Fourier representations of our data to capture higher-frequency information. We did this by creating a custom Fourier layer that transforms the inputs so that the network can represent their frequency content.

In parallel with our work on PINNs, we explored neural operators: neural networks that map between continuous function spaces rather than only between discrete spaces such as vectors and matrices. These models have been shown to be more flexible and generalizable than PINNs, which makes them easier to optimize, especially when new inputs are introduced.

Most of our project was spent researching and implementing these two approaches (PINNs and neural operators). A more detailed report of our methods, results, data, and references can be found in the other sections of our site.
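To make the PINN idea above more concrete, here is a minimal sketch in plain Python. It is not the model used in this project. It assumes one standard separable closed-form solution of the 2D heat equation on the unit square, an illustrative diffusivity `ALPHA`, and central finite differences standing in for the automatic differentiation a real PINN would use. The function names (`exact_solution`, `heat_residual`, `pinn_loss`, `fourier_features`) are hypothetical, and the sine/cosine encoding is only one common way such a Fourier input layer might look.

```python
import math

ALPHA = 0.1  # assumed thermal diffusivity, chosen only for illustration


def exact_solution(x, y, t, alpha=ALPHA):
    """One closed-form solution of u_t = alpha * (u_xx + u_yy) on the unit
    square with zero boundary values and u(x, y, 0) = sin(pi x) sin(pi y)."""
    decay = math.exp(-2.0 * alpha * math.pi ** 2 * t)
    return decay * math.sin(math.pi * x) * math.sin(math.pi * y)


def heat_residual(u, x, y, t, alpha=ALPHA, h=1e-3):
    """Residual u_t - alpha * (u_xx + u_yy), estimated with central
    differences (a stand-in for autodiff in a real PINN)."""
    u_t = (u(x, y, t + h) - u(x, y, t - h)) / (2.0 * h)
    u_xx = (u(x + h, y, t) - 2.0 * u(x, y, t) + u(x - h, y, t)) / h ** 2
    u_yy = (u(x, y + h, t) - 2.0 * u(x, y, t) + u(x, y - h, t)) / h ** 2
    return u_t - alpha * (u_xx + u_yy)


def pinn_loss(u, data_points, collocation_points, weight=1.0):
    """Data misfit plus a weighted physics term.

    data_points: list of (x, y, t, target) tuples.
    collocation_points: list of (x, y, t) tuples where the PDE is enforced.
    """
    data_term = sum((u(x, y, t) - target) ** 2
                    for x, y, t, target in data_points) / len(data_points)
    physics_term = sum(heat_residual(u, x, y, t) ** 2
                       for x, y, t in collocation_points) / len(collocation_points)
    return data_term + weight * physics_term


def fourier_features(coords, frequencies):
    """Encode each coordinate v as sin(2 pi k v), cos(2 pi k v) for every
    frequency k, so a downstream network sees higher-frequency inputs."""
    features = []
    for v in coords:
        for k in frequencies:
            features.append(math.sin(2.0 * math.pi * k * v))
            features.append(math.cos(2.0 * math.pi * k * v))
    return features


def steady_guess(x, y, t):
    """A deliberately wrong candidate: it ignores the decay in time."""
    return math.sin(math.pi * x) * math.sin(math.pi * y)


# Training data generated from the closed-form solution at t = 0.
data = [(0.25, 0.5, 0.0, exact_solution(0.25, 0.5, 0.0)),
        (0.5, 0.5, 0.0, exact_solution(0.5, 0.5, 0.0))]
# Interior points at later times where only the physics term applies.
collocation = [(0.5, 0.5, 0.2), (0.3, 0.7, 0.5)]

loss_exact = pinn_loss(exact_solution, data, collocation)
loss_wrong = pinn_loss(steady_guess, data, collocation)
```

Both candidates match the data at t = 0, so the data term alone cannot tell them apart. The physics term is what penalizes `steady_guess`, which is the role the physics-based loss term plays in a PINN.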